Artificial Intelligence (AI) is a fascinating field that allows machines to perform tasks that typically require human intelligence. Among the many innovations in AI, Retrieval-Augmented Generation (RAG) and Large Language Models (LLMs) stand out as powerful tools for generating human-like text and providing accurate information. This article will explore these concepts in detail, breaking them down into simple terms, much like explaining them to a five-year-old.

What is RAG (Retrieval-Augmented Generation)?

Retrieval-Augmented Generation (RAG) is a way of using AI so that, when machines answer questions or create text, they can draw on relevant and up-to-date information. Imagine you have a super-smart robot friend who can answer any question. Instead of guessing or relying only on what it learned long ago, this robot can look up the latest facts before answering. That’s what RAG does!

Diagram of RAG

User Prompt → Retrieval Model → Knowledge Sources → LLM → Response

1. User Prompt: You ask a question.

2. Retrieval Model: The system searches its knowledge sources for the pieces of information most relevant to your question.

3. Knowledge Sources: These are databases or document collections that hold the information, including the latest updates.

4. LLM: The Large Language Model takes the retrieved information and forms a complete answer.

5. Response: The robot friend gives you an answer!
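To make the Retrieval Model step more concrete, here is a minimal Python sketch. It assumes a tiny in-memory list stands in for the knowledge sources, and it scores documents with simple word-count (bag-of-words) cosine similarity rather than the neural embeddings and vector databases that real RAG systems typically use. The names `KNOWLEDGE_SOURCE`, `tokenize`, `cosine_similarity`, and `retrieve` are hypothetical, chosen for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge source: a real system would use a database,
# a document store, or a vector index instead of a Python list.
KNOWLEDGE_SOURCE = [
    "The James Webb Space Telescope observes the universe in infrared light.",
    "RAG combines a retrieval step with a large language model.",
    "Photosynthesis converts sunlight, water and carbon dioxide into sugar.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents whose word counts are most similar to the query's."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


print(retrieve("What does the James Webb telescope observe?", KNOWLEDGE_SOURCE))
```

The retrieved passages are what the LLM step then receives alongside your question.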

What Does RAG Do?

RAG helps improve the accuracy of responses generated by LLMs. By combining retrieval methods with generative models, RAG helps make answers not only coherent but also more likely to be factually correct and contextually relevant.

Example

Let’s say you ask, “What’s the latest news about space exploration?”

- Without RAG, the robot might give you outdated information, limited to what it saw in its training data.

- With RAG, it looks up recent articles or databases about space exploration and gives you the most current news.

How Does RAG Work?

RAG works through a series of steps:

1. User Query: You type in your question.

2. Data Retrieval: The system searches its databases for relevant information.

3. Contextual Prompting: It adds the retrieved data to your question, giving the model a richer context to work from.

4. Response Generation: The LLM uses this enriched context to generate a more accurate answer.

Diagram of RAG Process

User Query → Retrieve Relevant Data → Combine with Query → Generate Response
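The four steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the retriever simply counts shared words (a toy stand-in for real search), the prompt wording is one possible template, and `generate` is a hypothetical placeholder for a call to an actual LLM, which this sketch does not make.

```python
import re

# Hypothetical document collection standing in for the system's databases.
DOCUMENTS = [
    "Mars has two small moons named Phobos and Deimos.",
    "The Moon orbits Earth roughly once every 27 days.",
    "Saturn is known for its bright ring system.",
]


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Step 2: rank documents by how many words they share with the query (toy retriever)."""
    query_words = words(query)
    return sorted(documents, key=lambda doc: len(query_words & words(doc)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Step 3: combine the retrieved passages and the question into one prompt."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\n"
        "Answer:"
    )


def generate(prompt: str) -> str:
    """Step 4 placeholder: a real system would send `prompt` to an LLM here."""
    return f"<LLM response to a {len(prompt)}-character prompt>"


query = "How many moons does Mars have?"  # Step 1: user query
context = retrieve(query, DOCUMENTS)
prompt = build_prompt(query, context)
print(prompt)
print(generate(prompt))
```

Telling the model to answer "using only the context below" is a common way to keep the response tied to the retrieved data, though it does not guarantee the model will comply.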

Who Uses RAG?

Many industries use RAG to enhance their AI applications:

- Customer Support: Companies use RAG to provide accurate answers to customer inquiries quickly.

- Healthcare: Medical professionals can access updated research while consulting with patients.

- Education: Students can get reliable information for their assignments by querying educational databases.

Why is RAG Important?

RAG is essential because it addresses two significant challenges:

1. Hallucinations: Sometimes, LLMs make up answers that sound plausible but are incorrect. RAG reduces this risk, though it does not eliminate it, by grounding answers in retrieved source data.

2. Outdated Information: Traditional LLMs are trained on fixed datasets, which means their knowledge can become stale over time. RAG lets the model use current information without retraining it.
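Both of these challenges can be illustrated with a small sketch. It assumes one common pattern, which is not the only one: if nothing in the knowledge base is relevant enough, the system declines to answer instead of guessing, and new facts are added by updating the knowledge base rather than retraining a model. The word-overlap (Jaccard) score, the `THRESHOLD` value, and the function names are hypothetical choices for illustration.

```python
import re

# Hypothetical relevance cut-off; real systems tune this on their own data.
THRESHOLD = 0.2


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of distinct words the two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def respond(query: str, knowledge_base: list[str]) -> str:
    """Answer from the best-matching passage, or admit that no source covers the question."""
    query_words = words(query)
    best = max(knowledge_base, key=lambda doc: jaccard(query_words, words(doc)), default=None)
    if best is None or jaccard(query_words, words(best)) < THRESHOLD:
        # Nothing relevant was retrieved, so decline instead of guessing.
        return "I don't know based on the available sources."
    return f"According to the sources: {best}"


knowledge_base = ["The cafeteria opens at 8 a.m. on weekdays."]
question = "When does the library close?"
print(respond(question, knowledge_base))  # no relevant source yet

# New information goes into the knowledge base; no model is retrained.
knowledge_base.append("The library will close at 9 p.m. tonight.")
print(respond(question, knowledge_base))
```

The first call declines because the only passage shares just the word "the" with the question; after the new passage is appended, the same question is answered from it.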

What is an LLM (Large Language Model)?

A Large Language Model (LLM) is a type of AI designed to understand and generate human language. Think of it as a giant library filled with books about everything! It learns from vast amounts of text data to understand how words fit together.

How Does an LLM Work?

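1. Training Phase: The model reads huge amounts of text from books, websites, and articles and learns language patterns by repeatedly predicting the next word (more precisely, the next token).

2. Inference Phase: When you ask it something, it uses those learned patterns to generate an answer one token at a time.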

Diagram of LLM Functioning

Training Data → LLM Learning → User Query → Generate Answer
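The two phases can be shown with a deliberately tiny model. The sketch below is a toy bigram model, not how real LLMs work: real LLMs are large neural networks (typically transformers) trained on tokens, and they usually sample from a probability distribution rather than always picking the most common next word. It only illustrates the idea of learning "what comes next" from text and then generating word by word. The function names and the corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict[str, Counter]:
    """Training phase: count which word follows each word in the text."""
    words = text.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return dict(model)


def generate(model: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Inference phase: repeatedly append the most frequent next word."""
    output = [start]
    for _ in range(max_words):
        followers = model.get(output[-1])
        if not followers:
            break  # the model never saw a word after this one
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)
print(generate(model, "the", max_words=3))  # → the cat sat on
```

"cat" follows "the" twice in the corpus, so it wins; "sat" and "ate" are tied after "cat", and `Counter.most_common` keeps ties in the order first seen, so "sat" is chosen.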

Who Uses LLMs?

LLMs are widely used across various sectors:

- Content Creation: Writers use LLMs to help generate ideas or even full articles.

- Translation Services: They help translate languages accurately and quickly.

- Chatbots: Many customer service bots rely on LLMs to interact with users effectively.

Why Are LLMs Important?

LLMs are crucial because they enable machines to communicate in ways that feel natural to humans. They can assist in numerous tasks, making our lives easier and more efficient.

What Else Should You Know About RAG and LLMs?

To be knowledgeable about RAG and LLMs, consider these additional points:

1. Integration Challenges: Combining retrieval systems with language models can be complex, but it often leads to better performance.

2. Computational Resources: Running these models requires significant computing power, especially when processing large datasets.

3. Future Trends: The field is rapidly evolving, with ongoing research aimed at improving accuracy and efficiency.

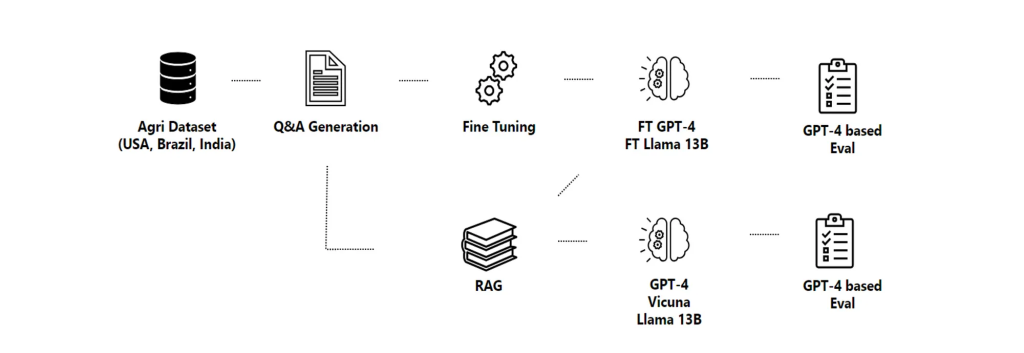

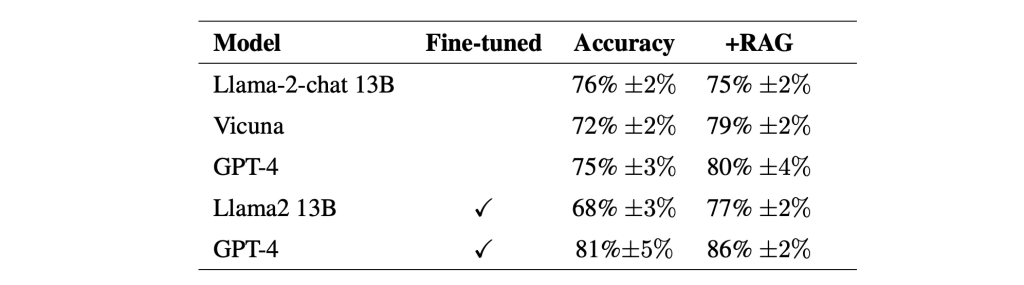

Accuracy

The table below compares accuracy in tests with no fine-tuning, with fine-tuning, and with RAG added. For GPT-4, accuracy rises from 75% to 81% after fine-tuning on domain-specific information.

When RAG is then added, GPT-4's accuracy rises further to 86%.

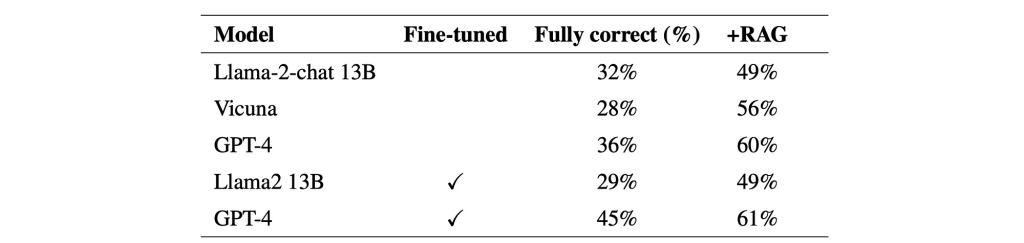
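Accuracy gains can be read either as percentage points (a simple difference) or as a relative change, and the two give different numbers. The small sketch below works through the GPT-4 figures quoted above, assuming, as the text suggests, that the 86% result combines fine-tuning with RAG. The `improvement` helper is hypothetical.

```python
def improvement(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in %) between two accuracy scores."""
    points = after - before
    relative = points / before * 100
    return points, relative


# GPT-4 accuracy figures quoted in the text (in %).
baseline, fine_tuned, fine_tuned_rag = 75.0, 81.0, 86.0

for label, before, after in [
    ("fine-tuning", baseline, fine_tuned),
    ("adding RAG", fine_tuned, fine_tuned_rag),
    ("overall", baseline, fine_tuned_rag),
]:
    points, relative = improvement(before, after)
    print(f"{label}: +{points:.0f} points ({relative:.1f}% relative)")
```

Fine-tuning adds 6 points (8.0% relative), RAG adds a further 5 points (about 6.2% relative), and the overall gain is 11 points (about 14.7% relative).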

The next table shows the percentage of answers that were fully correct, for base and fine-tuned models with and without RAG. Again considering only GPT-4, fine-tuning improves this figure by almost 10%, and RAG adds 16%.

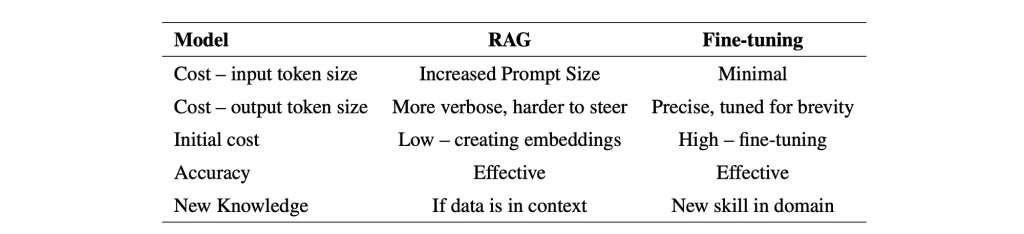

When to Use RAG vs LLMs

The choice between using RAG or relying solely on LLMs often depends on the specific requirements of the task at hand:

- Use RAG when:
  - Factual accuracy is paramount (e.g., healthcare chatbots).
  - The task involves retrieving specific information from large datasets.
  - Up-to-date knowledge is critical for the application.

- Use LLMs when:
  - The task requires creative generation or conversational abilities.
  - You need flexibility in handling diverse queries without extensive setup.
  - The relevant context fits within the model's token limit, so no external data is needed.

Difference between LLM & RAG

LLMs are standalone generative models that produce text based on patterns learned during training, without real-time access to external data.

RAG enhances LLMs by incorporating a retrieval mechanism that fetches relevant, up-to-date information from external sources to inform the generation process.

Comparative Analysis

| Feature | RAG | LLMs |
|---|---|---|
| Functionality | Augments responses with retrieved info | Generates responses based on learned patterns |
| Use Case | Ideal for fact-based queries requiring up-to-date knowledge | Suitable for creative tasks and general queries |
| Cost Efficiency | More efficient in token usage | Can be costly with long context inputs |
| Response Accuracy | Often higher, because answers are grounded in retrieved information | Dependent on training data; may hallucinate |
| Complexity | Requires setup of retrieval mechanisms | Simpler prompt-based interaction |
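The "Cost Efficiency" row comes down to simple arithmetic: without retrieval, giving a model access to a whole document collection means putting all of it into the prompt, while RAG sends only the few passages it retrieves. The sketch below compares the two under hypothetical sizes (1,000 documents of 500 tokens each, a 20-token question, and 3 retrieved passages); real token counts depend on the tokenizer, and real costs depend on the provider's pricing.

```python
def prompt_tokens(question_tokens: int, tokens_per_doc: int, num_docs: int) -> int:
    """Approximate tokens sent to the model: the question plus num_docs documents."""
    return question_tokens + num_docs * tokens_per_doc


# Hypothetical corpus and query sizes, chosen only for illustration.
QUESTION_TOKENS = 20
TOKENS_PER_DOC = 500
CORPUS_SIZE = 1_000
TOP_K = 3  # passages a retriever might return

long_context = prompt_tokens(QUESTION_TOKENS, TOKENS_PER_DOC, CORPUS_SIZE)  # send everything
with_rag = prompt_tokens(QUESTION_TOKENS, TOKENS_PER_DOC, TOP_K)  # send only retrieved passages
print(f"Long context: {long_context:,} tokens")
print(f"RAG:          {with_rag:,} tokens")
print(f"Ratio:        {long_context / with_rag:.0f}x")
```

With these assumed numbers, the long-context prompt needs 500,020 tokens versus 1,520 for RAG, roughly 329 times more, and a prompt that large may not fit in many models' context windows at all.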

Conclusion

In summary, Retrieval-Augmented Generation (RAG) and Large Language Models (LLMs) represent exciting advancements in AI technology. By understanding how they work together, we can appreciate their roles in creating more accurate and relevant responses in various applications—from customer service to education.

As we continue exploring these technologies, remember that they are tools designed to assist us in navigating the vast sea of information available today—making our interactions with machines more meaningful and productive!
