With Grok medical analysis, Elon Musk has introduced yet another groundbreaking concept through his AI company, xAI. Grok, xAI's AI chatbot, is drawing attention for an unusual data collection drive that urges users to submit their medical scans. The initiative aims to teach Grok to understand and analyze complex medical data, with the longer-term goal of supporting medical diagnostics. The proposal has been met with both excitement and skepticism, largely because of privacy and ethical concerns.

Background of Grok and xAI

xAI, Elon Musk's venture into artificial intelligence, was founded in 2023 to push the boundaries of what AI can achieve. Its flagship product, Grok, is a chatbot capable of advanced data analysis. One area where xAI now wants Grok to contribute is medical imaging, with the aim of assisting in diagnostic support and predictive health analysis.

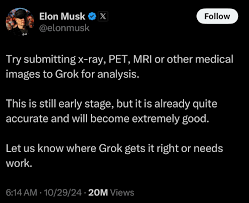

Grok Medical Analysis Image Collection Drive

Recently, Musk encouraged users on X to submit medical scans, including X-rays, PET scans, and MRI images, to Grok. By analyzing these images, Grok could improve its understanding of intricate health data, laying the groundwork for more responsive diagnostic tools in healthcare. This call for data is part of xAI's broader plan to fine-tune Grok so that it can provide real-world medical insights.

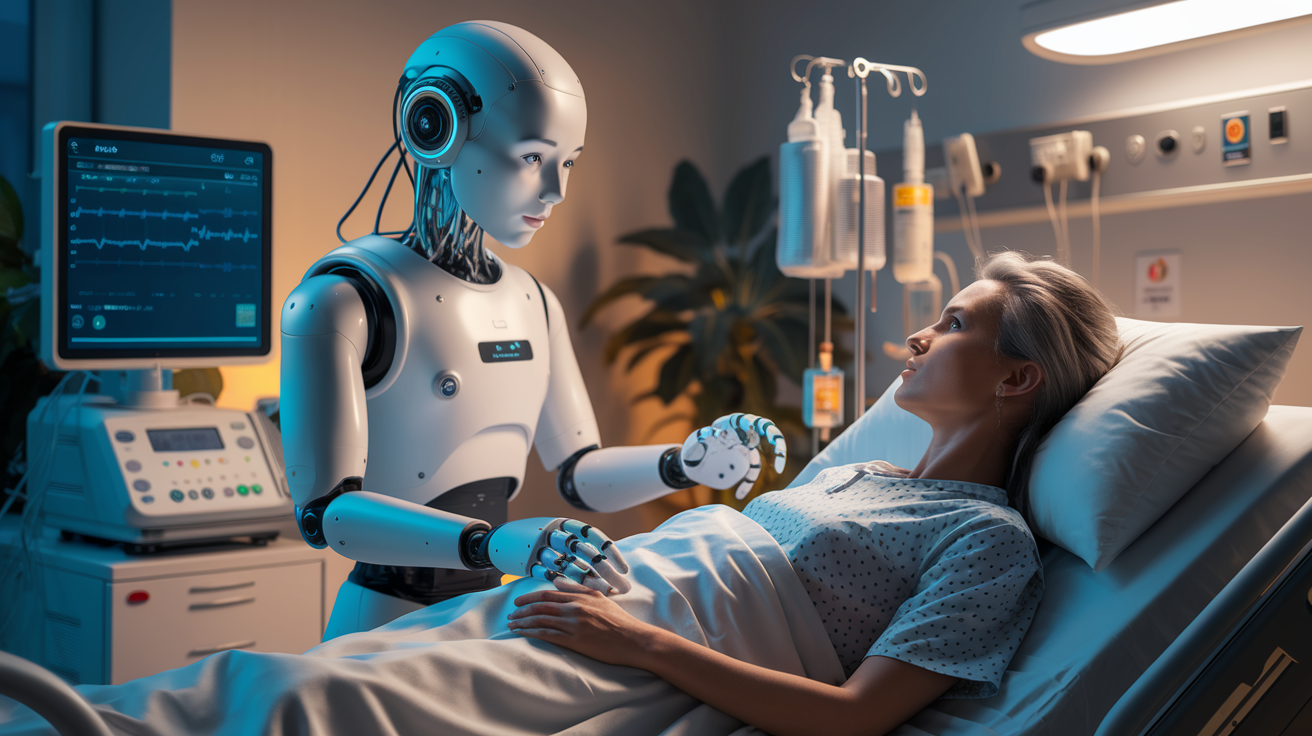

The Role of AI in Diagnostic Support

AI's potential in interpreting medical images is substantial. Grok could help doctors spot patterns that might otherwise be missed. As AI-driven analysis advances, tools like Grok could process massive datasets far faster than human professionals working alone, surfacing insights that would otherwise take much longer to find.

Musk’s Vision for AI in Healthcare

Elon Musk’s larger vision includes revolutionizing healthcare diagnostics with AI. If Grok succeeds, it could serve as a digital assistant for healthcare providers, aiding in diagnostics and reducing the time needed for patient evaluations. This aligns with Musk’s goal of pushing AI to solve critical issues in fields such as healthcare, energy, and beyond.

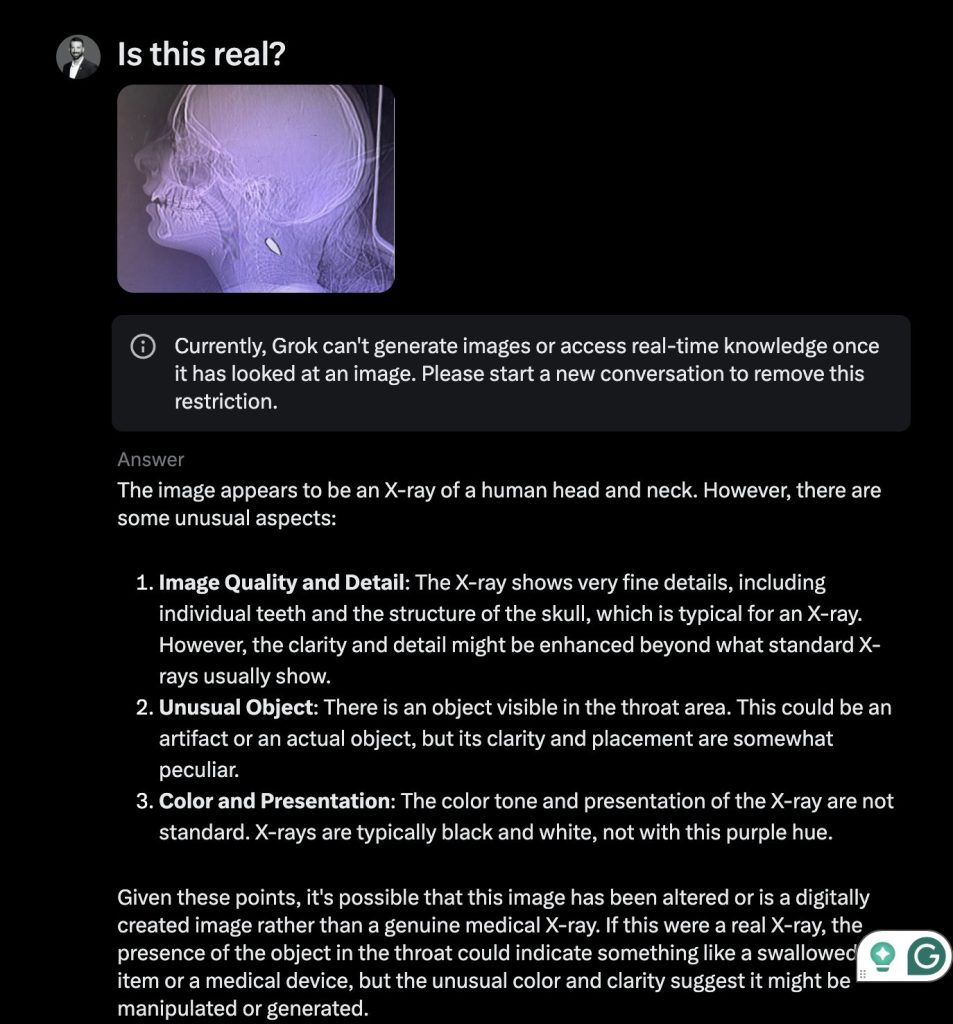

Ethical and Privacy Concerns

Musk's initiative, however, is not without controversy. Many have questioned the ethics of encouraging users to submit private medical scans. The practice raises significant questions about data privacy, consent, and the security of highly personal medical information. Critics argue that without robust privacy measures, Grok's data collection could expose sensitive health data.

Community Response and Criticism

Healthcare professionals and privacy advocates have reacted with caution. While some see value in AI advancements in healthcare, others worry about the potential for data misuse. The public’s feedback on this initiative has been mixed, with calls for transparency in data handling and storage processes.

Potential Risks of Data Submission

Sharing sensitive medical data online can be risky. Critics highlight the vulnerability of private health information, which, if mishandled, could be used maliciously or even sold to third parties. xAI faces pressure to ensure strong cybersecurity measures to protect users who choose to submit their scans.

Privacy Protections Claimed

In response to these concerns, xAI has outlined privacy measures it claims will protect user data. The company emphasizes secure encryption, limited data access, and compliance with privacy regulations to maintain user trust. Whether these measures will be enough to alleviate public concern remains to be seen.

Future Implications of AI in Healthcare

The integration of AI in healthcare, if managed responsibly, could significantly impact diagnostics, patient care, and health monitoring. As Grok and similar tools evolve, their potential to assist healthcare professionals could transform the industry, enabling more personalized and data-driven treatment options.

Comparing Grok to Existing Medical AI Tools

AI tools in medical diagnostics are not new; various AI-driven platforms already analyze imaging data to assist doctors. Many of these tools are built for narrow tasks, such as flagging a specific finding on a particular type of scan. Grok takes a different angle: backed by Musk's influence and trained on user-submitted scans, it aims to be a general-purpose diagnostic assistant that can interpret many kinds of medical images.

Challenges Facing Grok’s Implementation

Despite its promise, Grok faces significant challenges, including data accuracy, ethical considerations, and legal regulations around medical data usage. The technical demands of securely storing and analyzing vast medical datasets will require sophisticated solutions, while regulatory frameworks may limit Grok’s deployment.

Potential Advantages of Grok’s Success

If Grok achieves its goals, it could streamline diagnostic processes, enhance precision, and support early disease detection. For healthcare providers, Grok could become an invaluable tool, especially in resource-limited settings where specialist access is scarce.

How Grok Compares to Claude, ChatGPT, and Perplexity

Here are three main differences between Grok, Claude, ChatGPT, and Perplexity:

- Core Functionality:
  - Grok: Focuses on advanced data analysis, with potential medical diagnostic applications such as interpreting complex data like medical scans.
  - Claude: Prioritizes ethical, human-centered AI and often excels at understanding nuanced context in conversation.
  - ChatGPT: Offers versatile conversational AI with strong general-purpose capabilities across a wide range of topics.
  - Perplexity: Optimized for information retrieval, delivering concise answers drawn from diverse sources.

- Privacy and Security:
  - Grok: Raises privacy concerns, especially around its medical data collection.
  - Claude: Built with an emphasis on privacy and user data security.
  - ChatGPT: Offers privacy controls for users but is not specifically designed for sensitive domains such as medical data.
  - Perplexity: Known for quick answers with cited web sources, prioritizing accessibility over specialized data privacy protocols.

- Specialization and Use Cases:
  - Grok: Positioned for specialized applications like medical image analysis.
  - Claude: Well suited to emotionally intelligent or ethically sensitive interactions.
  - ChatGPT: Best for general Q&A, creative writing, coding support, and more.
  - Perplexity: Suited to quick fact-checking and informational lookups, making it a useful research tool.


Conclusion

Elon Musk’s Grok holds exciting potential in medical diagnostics, yet it also brings substantial ethical and privacy challenges. While the tool’s innovative approach could benefit healthcare, xAI must address security concerns for widespread acceptance. The journey of Grok, like many AI ventures, hinges on its ability to align technological progress with ethical responsibility.

FAQs

- Why does Elon Musk want users to submit medical scans to Grok?

xAI aims to improve Grok's diagnostic abilities by training it on a diverse set of medical images.

- What types of scans is Grok requesting?

Users are encouraged to submit X-rays, PET scans, and MRI images.

- Are there privacy risks in submitting medical scans to Grok?

Yes. Sharing medical data online could expose sensitive personal information.

- How does xAI plan to protect user data?

xAI claims to use encryption and other privacy measures to secure user data and limit access to it.

- What sets Grok apart from other medical AI tools?

Unlike many existing tools built for narrow diagnostic tasks, Grok is a general-purpose chatbot being trained on user-submitted scans, and its place within Musk's xAI gives it a distinct position in the medical AI landscape.